TrendDev Job Market Analyzer

TrendDev Job Market Analyzer

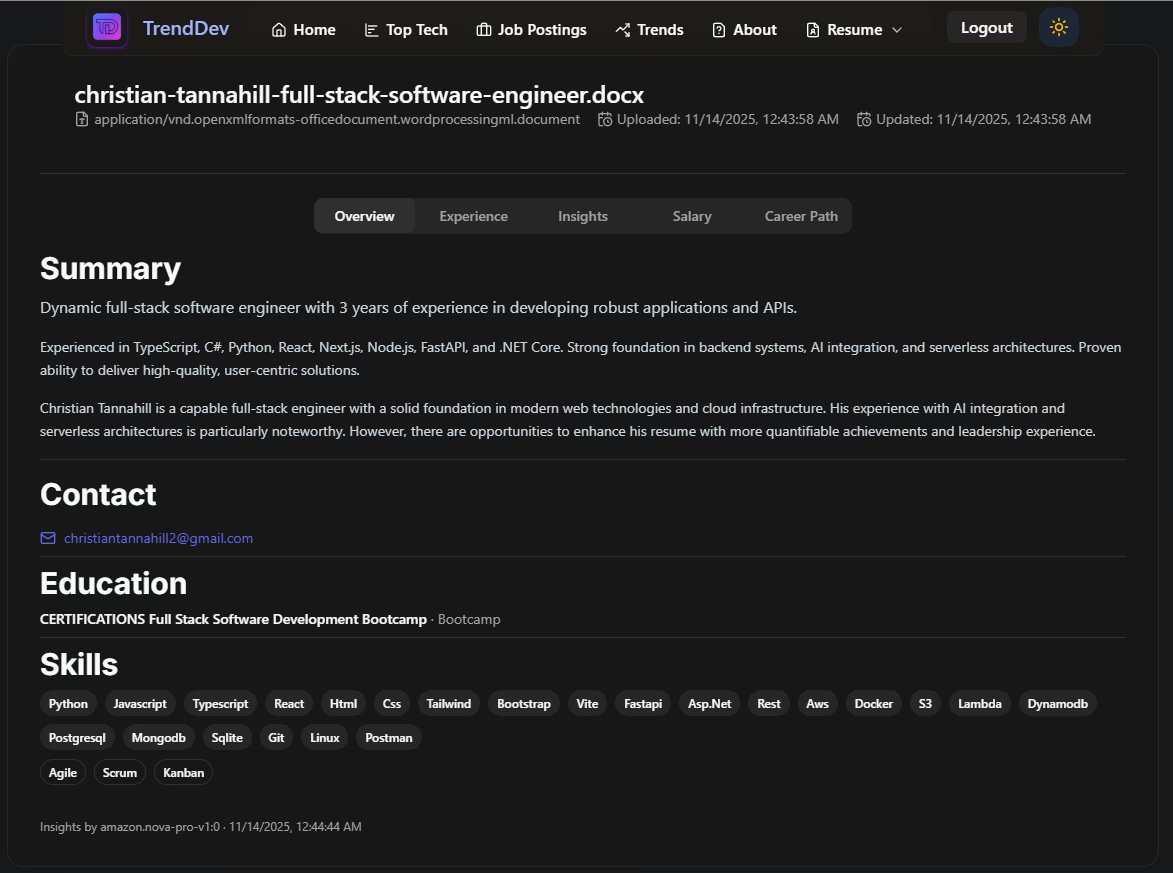

TrendDev is my end-to-end intelligence layer for the job market: Lambda-based collectors pull postings from Muse, Greenhouse, Lever, and USAJobs, Bedrock enriches each role with normalized skills and salary signals, Neon Postgres powers heavy analytics, and a React + Vite frontend hosted on AWS Amplify turns everything into an interactive experience for candidates. Cognito secures the experience, EventBridge schedules the pipelines, SQS drives asynchronous resume processing, and CloudWatch keeps telemetry for every hop.

🛠️Technology Stack

Product Overview

Job Market Analyzer solves three pain points simultaneously:

- Fresh, deduped job data - ingest-jobs fans out to Muse/Greenhouse/Lever/USAJobs, hashes each posting, and writes canonical records into DynamoDB (with an optional archival drop to S3 by source).

- Structured intelligence - bedrock-ai-extractor (Nova Pro) and openrouter-ai-enhancement-from-table run nightly via EventBridge to normalize titles, work modes, salary hints, technologies, and requirements, while calculate-job-stats pushes aggregate counts into the job-postings-stats table for the UI hero.

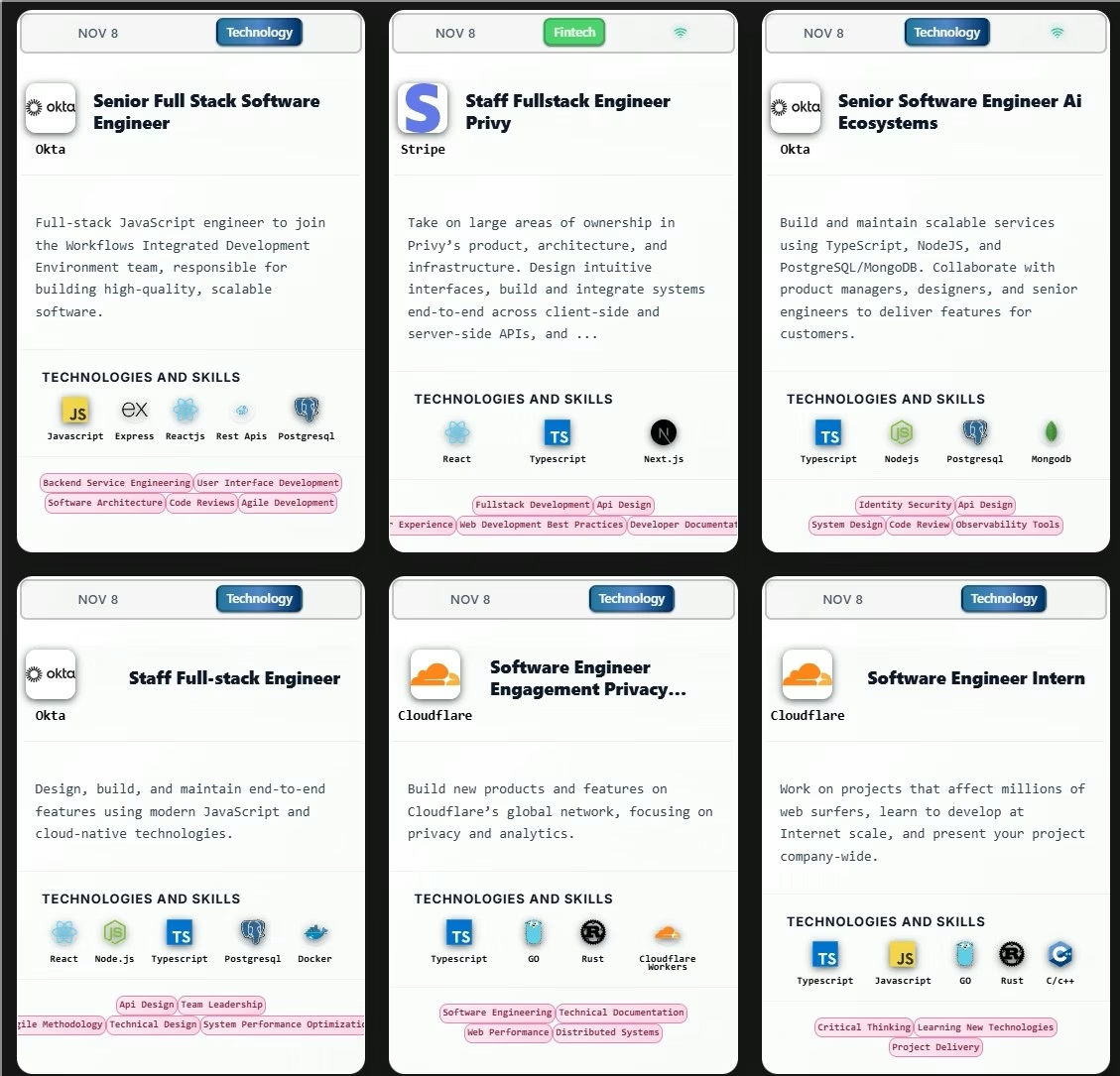

- Actionable UX - The React console exposes /postings (infinite-scroll job list), /trends (skill and technology trend explorer), /top-tech, and /resumes flows. Amplify Storage + pre-signed URLs handle uploads, Cognito secures access, and SQS decouples resume analysis.

✨Experience Highlights

Infinite job feed

TanStack Query + the Dynamo-backed **get-job-postings-paginated** Lambda provide cursor-based pagination, seniority filters, remote-mode filters, and fast tech search chips.

Job drill-downs

Dedicated detail routes hydrate Bedrock-enriched descriptions, co-occurring skills, salary ranges, and CTA links.

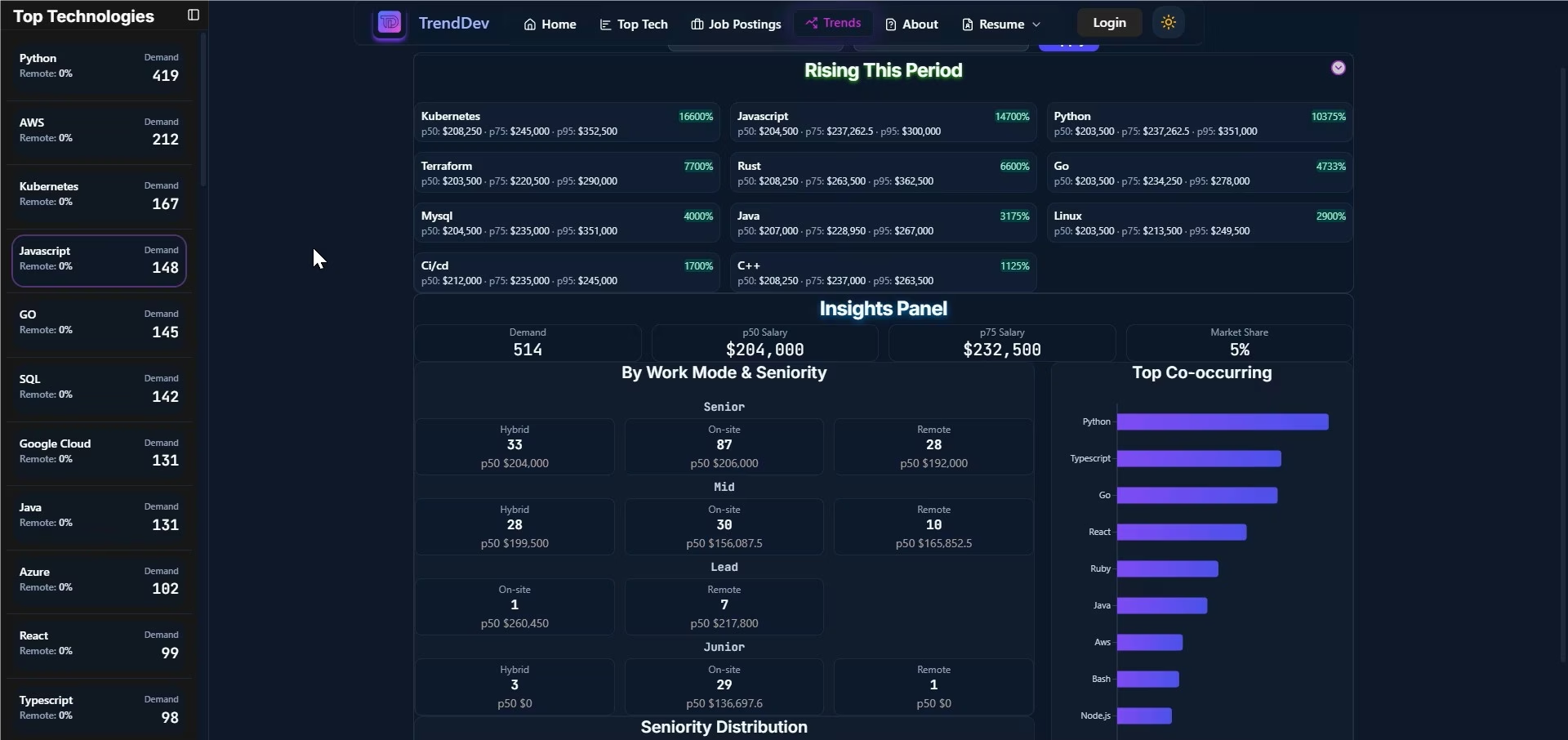

Top technologies view

Neon SQL endpoint (**get-job-postings-paginated-neon**) powers deterministic filtering and sorting for enterprise candidates.

Architecture Notes

- Multi-source ingestion. job-posting-aggregator scrapes up to 500 Muse pages per run, strips HTML with Cheerio, and writes JSON into S3 (job-postings-bucket-cstannahill). ingest-jobs adapters (Muse, Greenhouse, Lever, USAJobs) normalize every record, hash descriptions, dedupe via Dynamo batch gets, and optionally archive raw payloads.

- Normalization + enrichment. normalize-tables takes Dynamo records flagged as "pending normalization", massages fields (location, salary, skill arrays), and upserts them into Neon jobs, technologies, and jobs_technologies. bedrock-ai-extractor processes batches of 50 files, calling amazon.nova-pro-v1:0 and storing results in job-postings-enhanced. aggregate-skill-trends + aggregate-skill-trends-v2 roll those into skill-trends-v2 with co-occurrence matrices, remote shares, and salary percentiles.

- Analytics + APIs. calculate-job-stats uses Neon queries to derive total postings and top technologies (top 500). get-trends-v2 exposes /v2/trends/technologies/top, /rising, /technology/name, and /overview. API Gateway stacks (api/http-api, api/rest-api, api/sqs-jobs-api) route traffic to the right Lambda or queue. EventBridge cron rules trigger ingestion, enrichment, normalization, and stats jobs daily; CloudWatch retains structured JSON logs for each Lambda via the lightweight logger helpers.

- Authentication + UX. auth-register, auth-login, auth-verify-email, auth-get-current-user, and cognito-post-confirmation wrap Cognito operations so the frontend can stay plain React Query + fetch. Zustand stores guard routes, and useAuthInitialization ensures no protected view renders until Cognito tokens are hydrated. Amplify hosts the SPA and handles branch previews; Amplify Storage helpers plus pre-signed URLs keep the resume upload path entirely serverless.

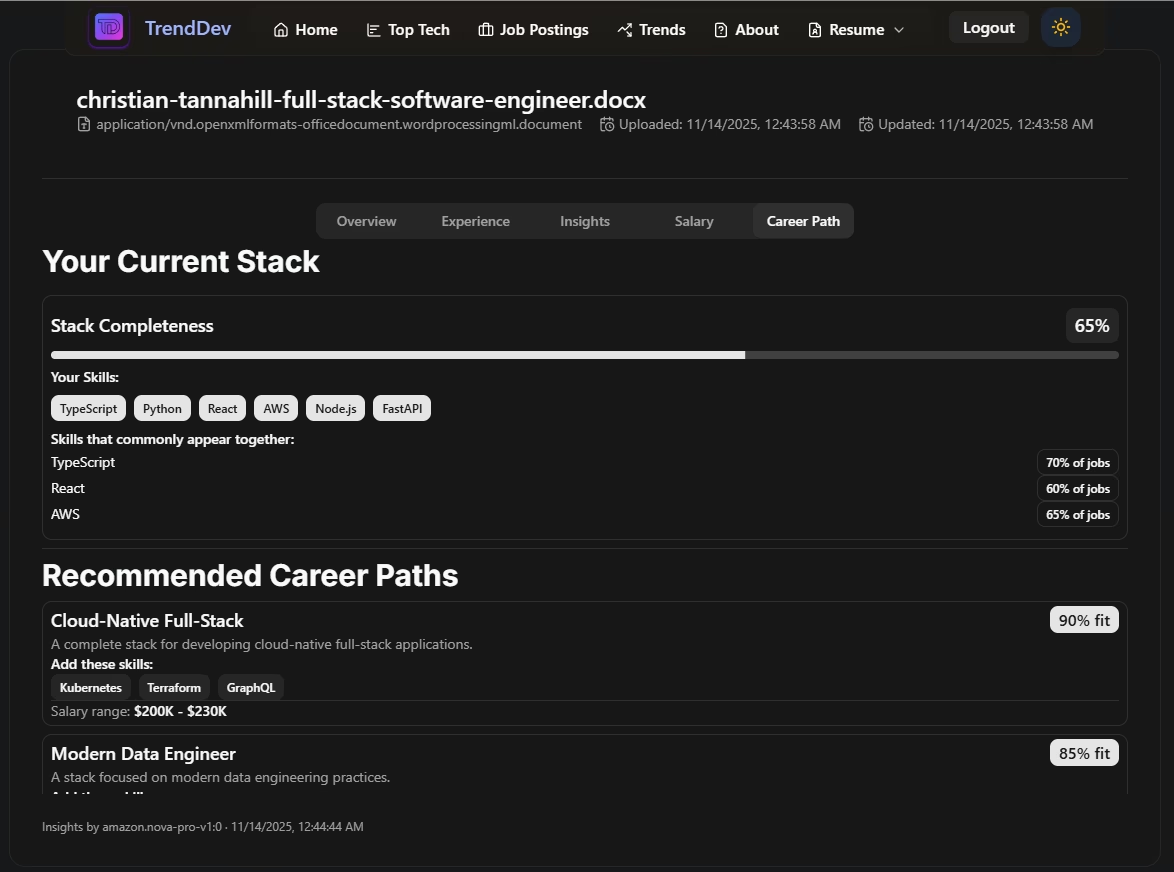

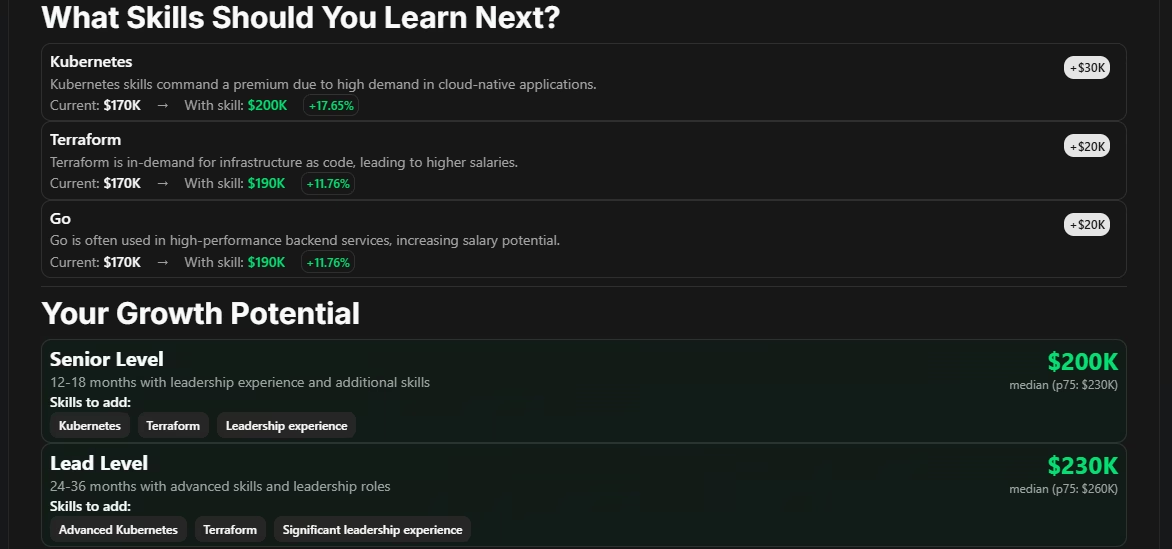

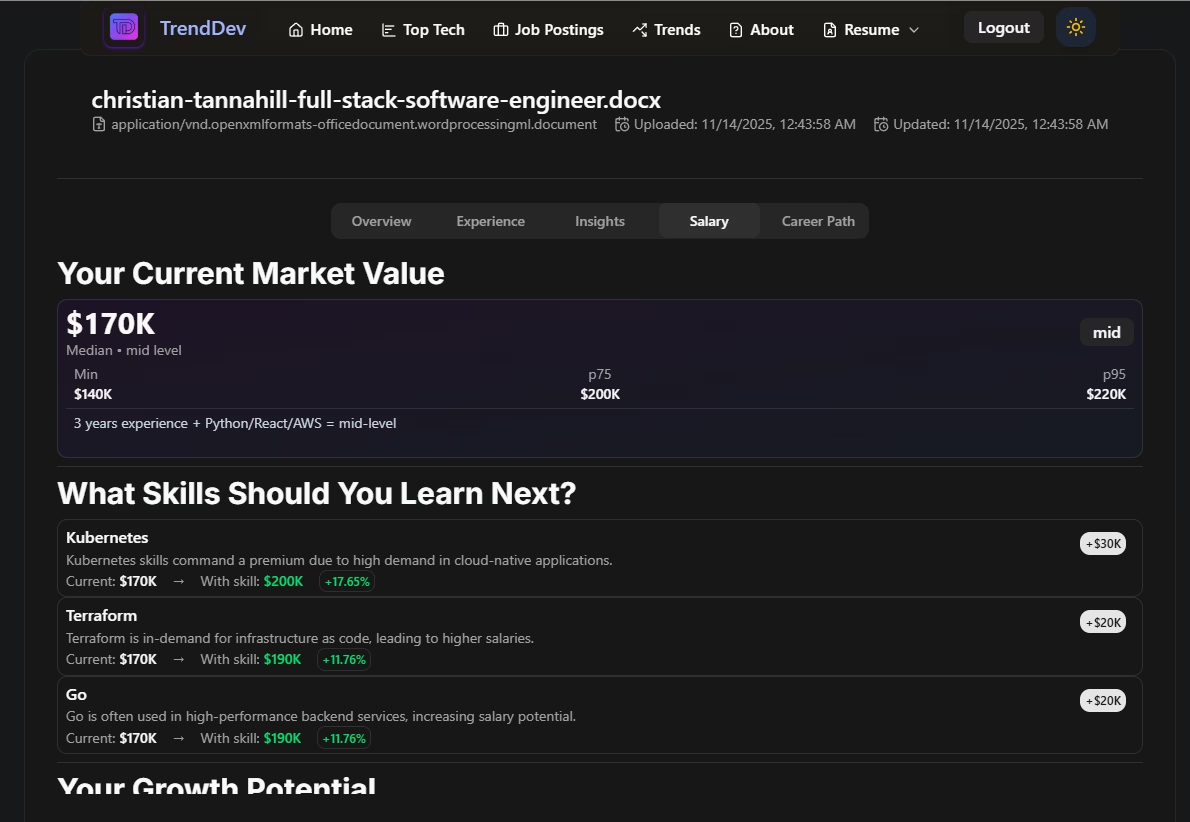

- Async resume workflow. resume-presigned-url issues S3 URLs, uploads land in per-user prefixes, compare-resume-id creates the job record and sends a message to SQS, and worker-process-resume handles extraction, Bedrock normalization, salary lookups, and writes insights back to Dynamo. get-job-processing-status exposes the job's state so the frontend polling loop can resolve to "complete", "processing", or "failed".

- Packaging & deployment. The root zip.js script zips any Lambda folder consistently, and scripts/packageLambdas.js can iterate every lambda. That keeps CI simple and ensures the 20+ functions (auth, ingestion, resume, stats, AI, APIs) all ship with the same structure.

Visual Story

Delivery Timeline

📅Development Journey

Ingestion foundation

Built the Muse + Greenhouse + Lever adapters, centralized packaging (**zip.js**), and S3 archival path.

AI enrichment & trends v2

Hooked up Bedrock Nova batches, Dynamo trend tables, Neon replicas, and the v2 trend endpoints.

Resume intelligence

Introduced Amplify-backed uploads, Cognito-guarded routes, SQS worker, and Bedrock-powered resume insights.

UX polish & observability

Shipped the new landing hero, Top Tech board, Trends v2 UI, CloudWatch dashboards, and Amplify-hosted previews.

Project Metrics

📊Impact by the Numbers

λLambda services

⏱Job ingestion window

֎🇦🇮LLM enrichment batch

⚙️Technologies tracked

Challenges & Solutions

Normalizing multi-source job data

MediumData EngineeringChallenge:

Each source (Muse, Greenhouse, Lever, USAJobs) exposes different pagination, schemas, and HTML-heavy descriptions, which made deduplication and enrichment error prone.

Solution:

Centralized adapters feed **ingest-jobs**, the handler hashes descriptions, batch-checks Dynamo for existing postings, sanitizes content with Cheerio, and archives raw JSON when configs request it.

Impact:

Reduced duplicate postings and let the enrichment pipeline focus on truly new or changed jobs, lowering Dynamo + Bedrock spend.

Balancing AI quality and cost

HardAI/MLChallenge:

Bedrock Nova is accurate but expensive, and batches larger than ~50 postings risk timeouts. Resume insights also need context from market trends.

Solution:

Processed S3 files in MAX_FILES_PER_RUN=50 batches, cleaned model output (JSON guards, deduped arrays), and augmented prompts with Neon + Dynamo stats so the LLM can anchor salary and stack insights.

Impact:

Reliable enrichment that stays within the Lambda timebox while producing structured skill, tech, benefit, and salary fields consumed across the app.

Asynchronous resume experience

MediumResume IntelligenceChallenge:

Uploading multi-MB resumes directly to Lambdas was slow, and users needed status feedback while AI processing ran asynchronously.

Solution:

Amplify Storage issues pre-signed PUT URLs, the SPA tracks upload progress, **compare-resume-id** queues jobs in SQS, and a worker Lambda updates Dynamo statuses that the frontend polls via **/jobs/{jobId}**.

Impact:

Uploads finish in seconds, resume insights return in under four minutes, and CloudWatch logs expose every state change for support.

AWS Services in Play

- SQS & API Gateway (SQS proxy) - queueing resume jobs and exposing /jobs 202 endpoints so the UI can poll.

- EventBridge - cron triggers for scraping, enrichment, normalization, and stats Lambdas to keep data fresh without manual intervention.

- Cognito - user registration, login, verification, and session refresh handled by dedicated Lambdas so the SPA stays framework agnostic.

- Amplify - hosts the Vite build, handles branch previews, and wires Amplify Storage helpers for direct S3 uploads.

- CloudWatch - captures the JSON log lines emitted by helpers inside ingest-jobs, get-trends-v2, and the resume worker, plus dashboards for success/error counts.

The result is a data-backed portfolio piece that demonstrates how I design resilient ingestion pipelines, layer GenAI responsibly, and deliver polished UX for candidates navigating the market.